Autonomous exploration and mapping

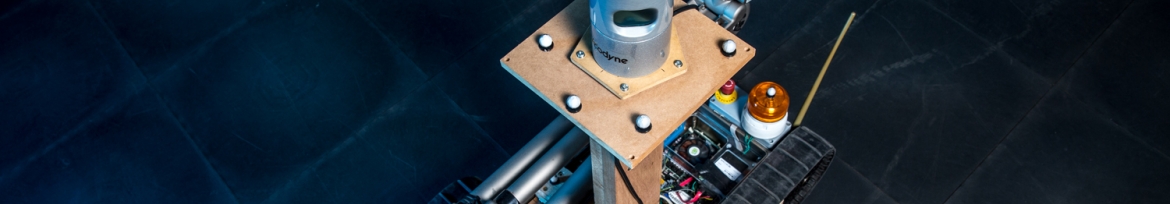

The CSIR developed an experimental system for autonomous exploration and mapping, in which a robot explores an unknown environment without operator input and produces a map. The system uses a PackBot 510 from Endeavor Robotics, USA, a non-autonomous platform that is normally driven by an operator using a joystick. To provide a foundation for autonomous operation, the team installed a Core-i7 computer to process sensor inputs and to run CSIR-developed autonomy software. Sensors include the robot’s built-in odometry, to which the team added an inertial measurement unit (IMU) and a Velodyne HDL-32E three-dimensional (3D) laser scanner. Because no Global Positioning System (GPS) sensor is required, the system is suitable for use in GPS-denied environments. The system software is based on the Robot Operating System (ROS), which is widely used for academic and industrial robotics development.

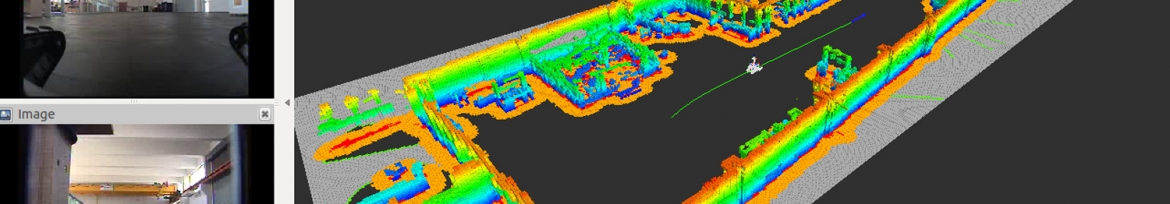

The primary computer vision sensor is the laser scanner, which represents the environment as a point cloud: a set of locations from which laser returns have been received. The scanner produces point clouds several times per second, and a Simultaneous Localisation and Mapping (SLAM) algorithm accumulates successive point clouds into a single representation of the environment, building a 3D map. SLAM is a key class of algorithms in which sensed data are used to form the map and, simultaneously, to determine the location of the robot within that map.
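The mapping half of this process can be illustrated with a minimal sketch. It assumes the robot pose for each scan has already been estimated (the localisation half of SLAM is not shown), simplifies the pose to a position plus a rotation about the vertical axis, and stores the map as a set of occupied voxels. The function names, the pose layout and the voxel size are illustrative assumptions, not details of the CSIR software.

```python
import math


def transform_points(points, pose):
    """Transform sensor-frame points into the map frame.

    pose is (x, y, z, yaw): a simplified pose with rotation about the
    vertical axis only (an assumption made to keep the sketch short).
    """
    px, py, pz, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(px + c * x - s * y, py + s * x + c * y, pz + z)
            for x, y, z in points]


def accumulate(voxel_map, points, pose, voxel_size=0.1):
    """Add one scan to the map, stored as a set of occupied voxel indices."""
    for x, y, z in transform_points(points, pose):
        voxel_map.add((math.floor(x / voxel_size),
                       math.floor(y / voxel_size),
                       math.floor(z / voxel_size)))
    return voxel_map


# Usage: two scans taken from different (hypothetical) poses share one map.
world = set()
accumulate(world, [(0.25, 0.25, 0.25)], (1.0, 2.0, 0.0, 0.0), voxel_size=0.5)
accumulate(world, [(0.25, 0.25, 0.25)], (3.0, 2.0, 0.0, 0.0), voxel_size=0.5)
```

Because the map is a set, returns that fall into an already occupied voxel do not grow it, which keeps the representation compact as scans overlap.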

To move autonomously from one point to another, the robot uses its map to plan an obstacle-free path from its current position to the destination point. It then computes the motor drive controls that will allow it to follow the path. As the robot moves, the laser scanner input is used for location estimation and also for collision detection. If a blocked path is detected, the robot plans and follows a new, obstacle-free path.
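The plan-and-replan cycle can be sketched on a small two-dimensional occupancy grid. The article does not say which planner the system uses; breadth-first search is assumed here only because it is short and returns a shortest path on a uniform grid. The grid encoding (0 for free, 1 for occupied) and the function name are likewise assumptions.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Return a shortest 4-connected path of (row, col) cells, or None.

    grid[r][c] == 0 marks a free cell and 1 an occupied cell.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and nxt not in parent):
                parent[nxt] = cell
                queue.append(nxt)
    return None                      # goal is unreachable


# Usage: plan, discover a new obstacle on the path, then replan.
grid = [[0, 0, 0],
        [0, 1, 0],
        [0, 0, 0]]
first = plan_path(grid, (0, 0), (2, 2))
blocked = first[1]                   # pretend the scanner sees an obstacle here
grid[blocked[0]][blocked[1]] = 1
second = plan_path(grid, (0, 0), (2, 2))
```

In this example the second plan routes around the newly blocked cell; if no free route remains, `plan_path` returns `None`.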

For autonomous exploration, the robot uses its map to identify a region that has not yet been viewed with the laser scanner. It then moves to a position from which the region can be observed and adds the scanned information to its map. This process continues until the robot has mapped all accessible regions of the environment.
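One common way to choose the next region to observe is frontier detection, in which the robot looks for free cells that border unexplored space. The article does not state that the CSIR system works this way, so the sketch below is an assumption: a two-dimensional grid with unknown, free and occupied cells, and a rule that picks the frontier nearest to the robot by Manhattan distance.

```python
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def find_frontiers(grid):
    """Return free cells that have at least one unknown 4-neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            neighbours = ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1))
            if any(0 <= nr < rows and 0 <= nc < cols
                   and grid[nr][nc] == UNKNOWN
                   for nr, nc in neighbours):
                frontiers.append((r, c))
    return frontiers


def nearest_frontier(grid, robot):
    """Pick the frontier closest to the robot, or None when none remain."""
    frontiers = find_frontiers(grid)
    if not frontiers:
        return None                  # nothing left to explore
    return min(frontiers,
               key=lambda cell: abs(cell[0] - robot[0]) + abs(cell[1] - robot[1]))


# Usage: the two right-hand cells in the top rows have not been scanned yet.
grid = [[FREE, FREE, UNKNOWN],
        [FREE, OCCUPIED, UNKNOWN],
        [FREE, FREE, FREE]]
target = nearest_frontier(grid, robot=(2, 0))
```

Exploration ends when `nearest_frontier` returns `None`. A fuller version would also discard frontiers that the path planner cannot reach, which corresponds to the accessible regions mentioned above.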

The map created by the robot, along with video from two forward-looking cameras, is supplied to an operator console via a wireless communications link. From this console, an operator can start, stop or redirect the robot.

This experimental system demonstrates capability in the design and development of computer vision systems and of autonomous systems in general. It lays the foundation for directed research in these areas and for the development of systems for specific industrial applications.